On July 14, 2021, Bill Spadea, a popular talk-radio host on New Jersey station 101.5, shared with his listeners the news that the emergent Delta variant of Covid-19 wasn’t deadly. Two months later, Fox TV host Tucker Carlson broadcast the similarly encouraging news that, if you’d already gotten Covid, you wouldn’t need to be vaccinated. The problem with these upbeat stories was that they were blatantly incorrect, as was a barrage of apparent bad news about the pandemic, including reports that Covid vaccines could cause sterility, that each jab implanted an activity-tracking microchip, and that the vaccines themselves could transmit Covid.

Since the emergence of Covid in late 2019, misinformation about the virus has spread as widely and as rapidly as the virus itself, as has disinformation, which is defined as deliberately misleading information. Both have been rife throughout the pandemic, making it a challenge for public health officials to get their messages across, amplifying an already existing distrust of public health institutions (especially among minorities), and leading to preventable illness and death on a significant scale. If, as the expression goes, truth is the first casualty of war, it also appears to be among the earliest casualties of a pandemic.

UNTRUTHS, HALF-TRUTHS AND LIES

Medical mis- and disinformation aren’t new, even in the era of modern medicine. Long before the development of vaccines against Covid, a single, now debunked study led to the widespread belief that certain childhood vaccines could cause autism. Less dangerous, but equally unfounded, is the widely held idea that the consumption of sugar makes kids hyperactive. And during the early days of the HIV epidemic, many people mistakenly believed that mosquitos could spread the virus or that it could only be spread through homosexual sex.

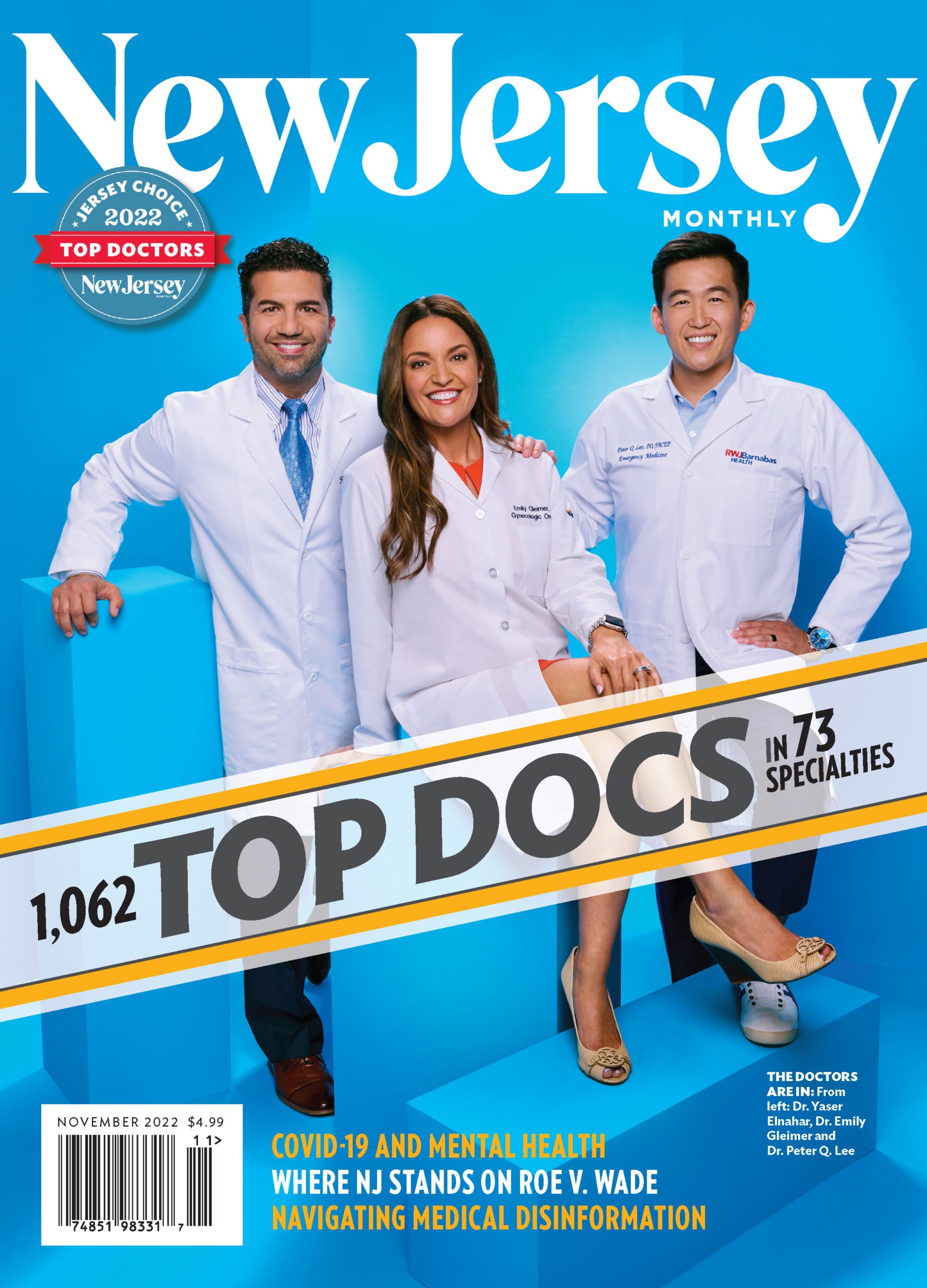

Buy our November 2022 issue here. Cover photo by Brad Trent

What’s different between these earlier medical myths and the dis- and misinformation surrounding Covid is, in large part, the omnipresence of the internet and its ability to exponentially hasten the spread of information, both true and false—making the phrase “going viral” particularly apt in the context of the current pandemic. In fact, notes Yonaira Rivera, assistant professor of communication at Rutgers School of Communication and Information, “myths tend to go viral faster than truths, partly because of their sensationalist nature.” Truths tend to be more subtle than myths, so a statement like, “The Covid vaccines implant a microchip in your body” has more emotional bang than, “The vaccines protect against severe Covid but don’t necessarily keep you from contracting the disease.”

While those myths originate with a host of sources, from national politicians to the guy sitting next to you at the barbershop, a 2021 report by the nonprofit Center for Countering Digital Hate found that 65 percent of anti-vaccine content was spread by 12 individuals. The so-called “disinformation dozen” consists largely of anti-vaccination agitators and supporters of alternative medicine, including Christiane Northrup, Ben Tapper and Robert F. Kennedy Jr. The nature of the internet itself has helped amplify their messages.

Andrea Baer, a public-service librarian at Rowan University and coauthor of a guide to Covid-19 misinformation, notes that the online environment “encourages us to do things very quickly,” including retweeting or reposting anything that induces a strong emotional reaction. How many of us, after all, are likely to fact-check a post before sharing it when that post confirms our own personal beliefs and/or biases? And thanks to their sophisticated algorithms, tech companies are adept at discerning those beliefs and biases in order to feed us information (or misinformation) that keeps us on their sites. “Online platforms and search engines aren’t designed with the well-being of people and society in mind, but with the well-being of profits,” Baer says. That would explain why a right-wing user’s Facebook feed included a wealth of stories about the efficacy of the antimalarial drug hydroxychloroquine against Covid, while their liberal-leaning neighbor’s was filled with articles about the lack of evidence for the medication’s effectiveness.

This “infodemic,” as the World Health Organization’s director-general, Tedros Adhanom Ghebreyesus, describes the flood of mistruths and half-truths that have threatened to submerge the actual truth, hasn’t been remedied by the mixed messages broadcast by our public health officials. Initially, for example, the Centers for Disease Control and Prevention (CDC) announced that masking wasn’t necessary and, in fact, could encourage the spread of Covid; subsequently, the CDC recommended masking both outdoors and in, but later declared that masking outdoors in most situations was unnecessary. That these contradictory messages arose from an incomplete understanding of a novel virus didn’t help to bolster public confidence in our system of public health.

In fact, says Bruce Li, assistant professor of communication studies at The College of New Jersey, “before the pandemic, most people did not know what public health was”—part of a broader problem of so-called health (or health care) illiteracy. The CDC defines personal health literacy as “the degree to which individuals have the ability to find, understand and use information and services to inform health-related decisions and actions for themselves and others.” When we lack it, notes Alexander Sarno, a primary care doctor in East Orange and one of the founders of the nonprofit Urban Healthcare Initiative Program, “we’re more likely to make our health care decisions based on folklore and fiction” rather than on sound medical principles. Health care illiteracy in the United States, says Sarno, is widespread.

That’s also true for those with a basic distrust of the medical establishment, including many in Black and other minority communities who have legitimate reasons for that distrust. “Minority communities, Black communities, have been treated very poorly by the medical establishment and by the United States health care system,” says Britt Paris, an assistant professor of library and information science at Rutgers who tracks misinformation in the political sphere. The most egregious example of this mistreatment is probably the infamous Tuskegee experiment, in which the CDC and the U.S. Public Health Service allowed some 400 Black men with syphilis to go untreated from 1932 to 1972 in order to study the disease.

Finally, our extraordinarily polarized political landscape caused many Americans to decide what “truths” to accept about Covid and its treatment based on their partisan beliefs, accepting the statements of their favored public figures on the left or right at face value and without deeper examination. “The pandemic increased that polarization,” says Baer, pushing us even deeper into our media echo chambers, where pundits’ reports are often based more on politics than public health.

FACTS MATTER

In a pandemic, mis- and disinformation about public health issues pose a particular challenge to the health of the public. Unproven treatments often aren’t just ineffective; some of them can also be dangerous and even deadly. For example, in the hours immediately after President Trump, in a 2020 press conference, wondered aloud whether injecting chlorine bleach might be a way to treat Covid, New York City’s poison hotline received 30 calls related to the drinking of household cleaning products—more than twice as many as they’d fielded in all of 2019.

The most damaging effects of misinformation during the pandemic have almost certainly resulted from false narratives about Covid vaccines. These include claims that the vaccines contain toxic ingredients, that they alter human DNA, that they were rushed through without proper testing, and that they are part of a plot to kill people with saline injections. None of those claims is correct, yet once they took hold through social media and other reports, vaccine refusal increased. And that lack of vaccination, says Paris, “[led] to a rising death toll in communities where people won’t get vaccinated, where they don’t believe the pandemic is real, where they won’t wear a mask.”

Another possible result of vaccination hesitancy was our failure, early on, to reach herd immunity, defined as the protection of a population from the spread of an infectious disease when a sufficient number of people achieve immunity to the disease through infection or vaccination. Instead, Covid spread quickly among the unvaccinated, which allowed it to mutate into the Greek alphabet of variants that have become increasingly resistant to the original vaccines.

CURES

With so many disparate drivers, the “infodemic” isn’t an easily treatable problem. “It’s such a complicated issue and, in many ways, systemic,” says Rowan librarian Baer, “that it’s going to require a lot of effort and change on the individual and the collective levels for a long period of time.” One effort that’s gotten a great deal of attention is self-policing on the part of social media platforms. As early as January 2020, for instance, Facebook began to remove content it deemed potentially harmful to users’ physical well-being, and in March of that year, the seven largest social platforms issued a joint statement that they would be combating Covid-related misinformation and “elevating authoritative content.” But even as the platforms flag and/or delete misinformation, it continues to spread. These efforts, says Paris, “may help somebody who’s on the fence or doesn’t have any sort of deeply held political stance on an issue, but they’re not good at changing firmly held beliefs.”

A more bottom-up approach, though likely to take some time to make an impact, could be more successful in the long run. It would essentially involve teaching the public the basics of health care and media literacy and offering methods of identifying misinformation. There’s already been some movement along those lines. Under a bipartisan bill that was introduced in the New Jersey State Legislature earlier this year, information literacy would be a required part of the curriculum in grades K–12. A growing number of educators, including Baer, offer workshops that teach other educators, as well as the general public, how to recognize misinformation. Li at The College of New Jersey believes that it would also be helpful to include courses teaching the basics of public health.

But just as individuals need to learn to be better consumers of health information, politicians and public health leaders need to become more adept at communicating that information. “The inconsistency of the messaging about masks and other issues made it hard for people to believe public health officials,” Li observes. It would have been better, he says, if they’d made it clear to the public from the get-go that they were offering information that was likely to change as they learned more about the virus. George Tewfik, a Newark-based anesthesiologist and coauthor of a study examining how misinformation spreads on Facebook, believes that faulty communication on the part of public health officials was at least partially to blame for mistrust in the Covid vaccines. “I think it would have been much easier to get widespread vaccination and herd immunity if people had been able to trust the public health officials from the very beginning,” Tewfik says.

Trust in those who convey public health messages is especially important at the local level. “What works,” says Rutgers’s Rivera, who in 2021 gave Senate testimony about the impact of misinformation on Latino communities, “is partnering with local organizations and community leaders”—people who already have the community’s trust. For example, as part of a program designed to help Newark’s small businesses bounce back from the worst days of the pandemic, Rutgers Global Health Institute looked to the city’s community leaders to help boost vaccination rates. One of those leaders was Micano Evra, whose radio show and West Ward restaurant were popular with Newark’s Haitian population. He agreed to hold a pop-up vaccination clinic at the restaurant, which he promoted on the show, and it turned out to be one of the city’s most successful vaccination efforts. Arpita Jindani, who oversaw the effort, summed up its success: “It’s all about human connection and honest conversations.” That’s as good a place as any to start fighting the onslaught of medical misinformation that has already generated so many casualties—truth among them.

WHAT TO BELIEVE

How can you know for sure that what you’re reading (about Covid or anything else) is based in truth? Andrea Baer, a librarian at Rowan University who conducts workshops on debunking misinformation, offers the following tips.

Understand how algorithms work. Those top search results aren’t necessarily the most accurate; more likely, they’ve popped up because they’re paid, they’re popular (read: sensational), or they’re what the algorithm thinks you want to see. Keep scrolling.

Exercise click restraint. When you read a tweet or a post, or visit a website making an intriguing claim, don’t share it (or accept it as truth) until you’ve examined your emotional reaction to it. Does it reinforce something you already believe? Are you accepting or rejecting it based on your political viewpoint?

Fact-check the source and the claim. Baer recommends using the so-called SIFT method to do this:

- Stop. Ask yourself if you know anything about the source and/or the claim’s reputation. If not …

- Investigate the source through what Baer calls lateral reading: looking at what others have said about it. (You might search, for example, “what is the New York Times?”)

- Find better coverage, if necessary. Search the gist of the claim (e.g., “Masking prevents Covid spread”) and repeat the step above to check a source. You can also check a claim at one of several independent fact-checking sites like factcheck.org, politifact.com, and snopes.com.

- Trace the claim to its original source and decide whether that source is trustworthy.

Leslie Garisto Pfaff is a frequent contributor to New Jersey Monthly.